1 - The science we are going to do!

2 - The long road of rejections (and necessary improvements) leading up to this (persistence pays off)

3 - How grateful I am to the many, many people who were involved along the way.

I'm going to convey these in reverse order...

3 - Gratitude:

It wasn't just me working on my own... on the contrary, this was a process that began during my scientific training, when my mentors let me read and edit their grants and manuscripts and, yes, write my own. It was gracious colleagues reading my drafts, editing my aims, and discussing the science with me. It was people betting on me by giving me a job and startup funds... and joining our lab team before we were funded. It was family and friends listening to me rant after rejections. And of course, it was scientific collaborators working as hard and as long as I did (looking at you, Andriy). So, first order of business: thanks!

2 - Long and winding (painful) road.

This particular grant, which I will describe in the next section, was not, by any means, funded on the first try. In fact, it took about 4 years... and 6 submissions.

Three R21s: one scored (*8*, 4, 3), a resubmission not discussed, and a new submission after that not discussed.

Then three R01 submissions: 21% (3, 4, 5), 17% (3, 4, 4), and finally the awarded grant at 10% (2, 2, 4).

Some takeaway thoughts about this.

Many of the ideas stayed the same (though not all): those the study section liked, I kept; those they didn't, I rethought. For the most part, while I was frustrated and sometimes even angry at my rejections, upon further reflection I usually found that the reviewers' opinions were pretty well founded.

In hindsight, I'm also sort of amazed at how immature the ideas I originally submitted were. While the ideas were sound (for the most part), the data wasn't there to support any of my claims. Here is an example from the first R21 submission in 2016, side by side with one from the funded R01 in 2019 (almost 2020). On the left you can see some 'background' where I suggest the experiment I WANT to do... then we did it, analyzed it, and published it (1.5 years later), and it became a subpanel of 'previous work' in the funded proposal (right).

Left: an idea of what a collateral sensitivity matrix MIGHT look like after the experiments I proposed (yes, I made that in PowerPoint). Right (panel A): what it looks like now as preliminary data... and B-F: some more interesting observations from those (now published) experiments (see Dhawan et al.).

Another interesting tidbit: after getting back my first R01 score (21%), I was able to take advantage of the 'quick turnaround resubmit' for ESIs (now defunct), so I only had a few weeks to get it back in. This meant I could only really address small issues the reviewers had. In this case, I was able to add a co-authored publication with a new method for comparing in vivo growth curves, but not much else except a small in vivo experiment Andriy had performed (which has since been expanded into a whole paper of its own showing the graduality of the evolution of resistance, which in turn has spawned a new grant, this one still in the infant stages of development). Changing out the aim that was the big sticking point wasn't possible on that short timescale, though. I got a 17% on the A1 and was frustrated by the result (but maybe shouldn't have been surprised).

17% is pretty far outside the funding range, but the PO was very supportive and presented it for exception. In the end it wasn't funded, but I was very encouraged by the support. TALK TO YOUR PROGRAM OFFICERS!

So, with more time and the new summary statement, I was able to thoughtfully rewrite the proposal as a new A0, which included two new publications: the EGT one from above, and our collateral sensitivity/experimental evolution work in E. coli. I've blogged about both here before (road to measuring evolutionary game in cancer, and Antibiotic collateral sensitivity is contingent on the repeatability of evolution). Cynically, one could say it was the two 'shiny' journals we published in that put us over the top, which is likely true in part, but I think it was much more the honing of the science and grantspersonship that we managed.

Anyways, happy to share any/all of the grants/summary statements if you want to learn from my mistakes, just shoot me an email.

Right. Now the science (in blog/tweetorial format - which I will cross-post on the mathoncoblog):

my third attempt at this R01 submission that went to MABS - scores went A0: 21%, A1: 17%, rewritten new A0: 10% (funded through magic of ESI thank you @NIHFunding) #persistence

we are excited to study the evolution of TKI resistance in lung cancer through three specific aims:

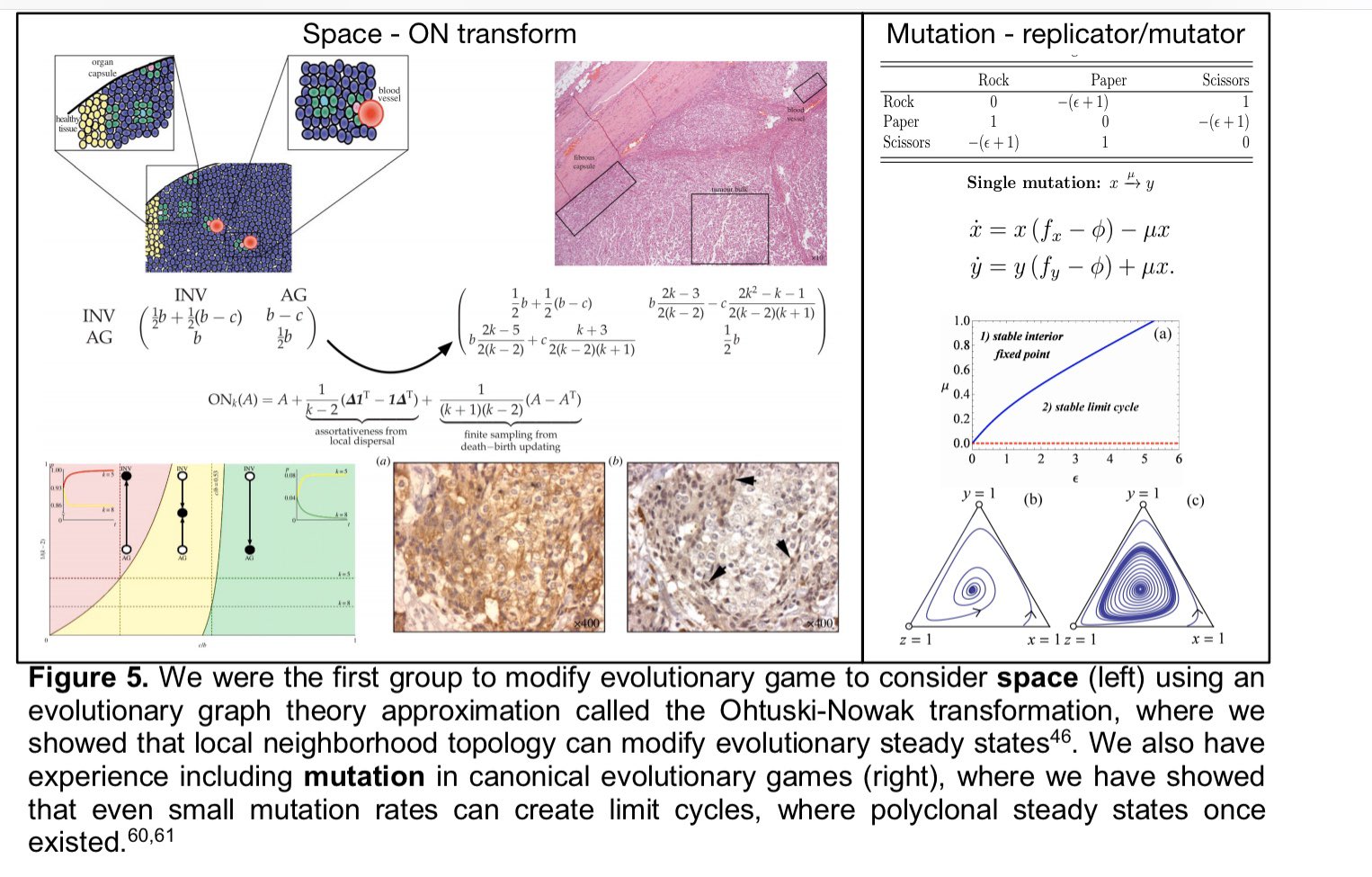

Together with @AndriyMarusyk, our first aim will be to see if we can use @kaznatcheev's evolutionary game assay in a pairwise fashion to predict three-strategy dynamics in vitro. (n.b. I didn't manage to get a fundable score until after that paper was published... even with the whole paper on bioRxiv for over a year...)

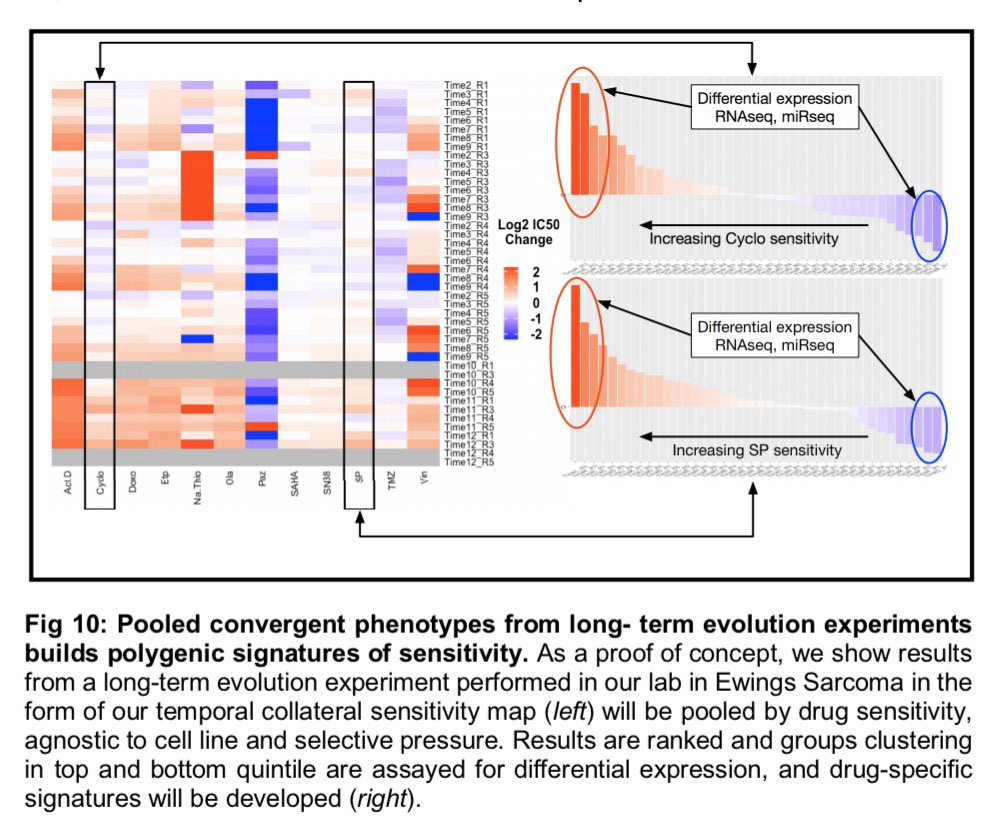
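To make the jump from pairwise assays to three-strategy dynamics a bit more concrete, here is a minimal Python sketch. It is my illustration of the general idea, not the analysis pipeline from the grant. It assumes that each pairwise game assay yields a 2x2 payoff matrix, that the off-diagonal entries can simply be slotted into a 3x3 matrix, and that the self-interaction (diagonal) terms measured in different pairs can be averaged. Whether interactions really combine this way is exactly what the aim tests. The function names and all the numbers are hypothetical placeholders.

```python
import numpy as np

def assemble_payoff(pairwise, n):
    """Build an n x n payoff matrix from pairwise 2x2 games (hypothetical helper).

    pairwise maps (i, j) -> [[a_ii, a_ij], [a_ji, a_jj]].
    Off-diagonal entries are copied directly; each diagonal entry is the
    average of that strategy's self-payoff across the pairs it appeared in
    (an assumption for illustration, not a measured rule).
    """
    A = np.zeros((n, n))
    diag_sum = np.zeros(n)
    diag_count = np.zeros(n)
    for (i, j), game in pairwise.items():
        g = np.asarray(game, dtype=float)
        A[i, j] = g[0, 1]
        A[j, i] = g[1, 0]
        diag_sum[i] += g[0, 0]
        diag_sum[j] += g[1, 1]
        diag_count[i] += 1
        diag_count[j] += 1
    np.fill_diagonal(A, diag_sum / diag_count)
    return A

def replicator(x0, A, dt=0.01, steps=5000):
    """Forward-Euler integration of dx_i/dt = x_i * ((A x)_i - x.A x)."""
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        f = A @ x            # fitness of each strategy
        phi = x @ f          # mean population fitness
        x = x + dt * x * (f - phi)
    return x

# Hypothetical pairwise games for strategies 0, 1, 2 (placeholder values).
pairwise = {
    (0, 1): [[1.0, 0.5], [0.8, 1.2]],
    (0, 2): [[1.2, 0.9], [1.1, 1.0]],
    (1, 2): [[1.1, 0.7], [1.3, 0.9]],
}
A = assemble_payoff(pairwise, 3)
x_final = replicator([1 / 3, 1 / 3, 1 / 3], A)
print(A)
print(x_final)
```

Comparing a predicted trajectory like this against real three-way co-cultures is one way to check whether the pairwise picture holds up.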

2nd aim will be to study commonalities in drug sensitivity/resistance over a long (well, longish, not @RELenski long) term evolution experiment: how do sensitivities change, and can we ID them? This will support super ⭐️ @CWRUSOM MSTP @ScarboroughJess, w/ help from @n8pennell (this aim is supported by our own work in E. coli).

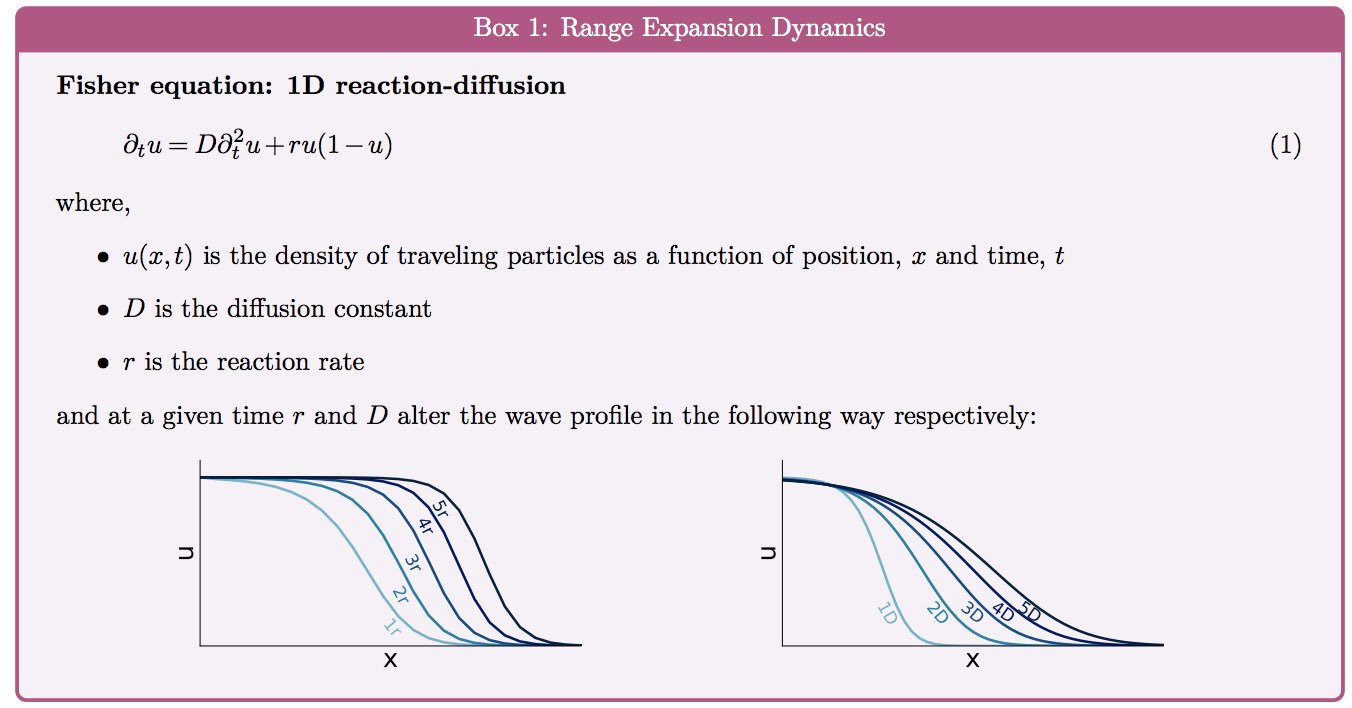

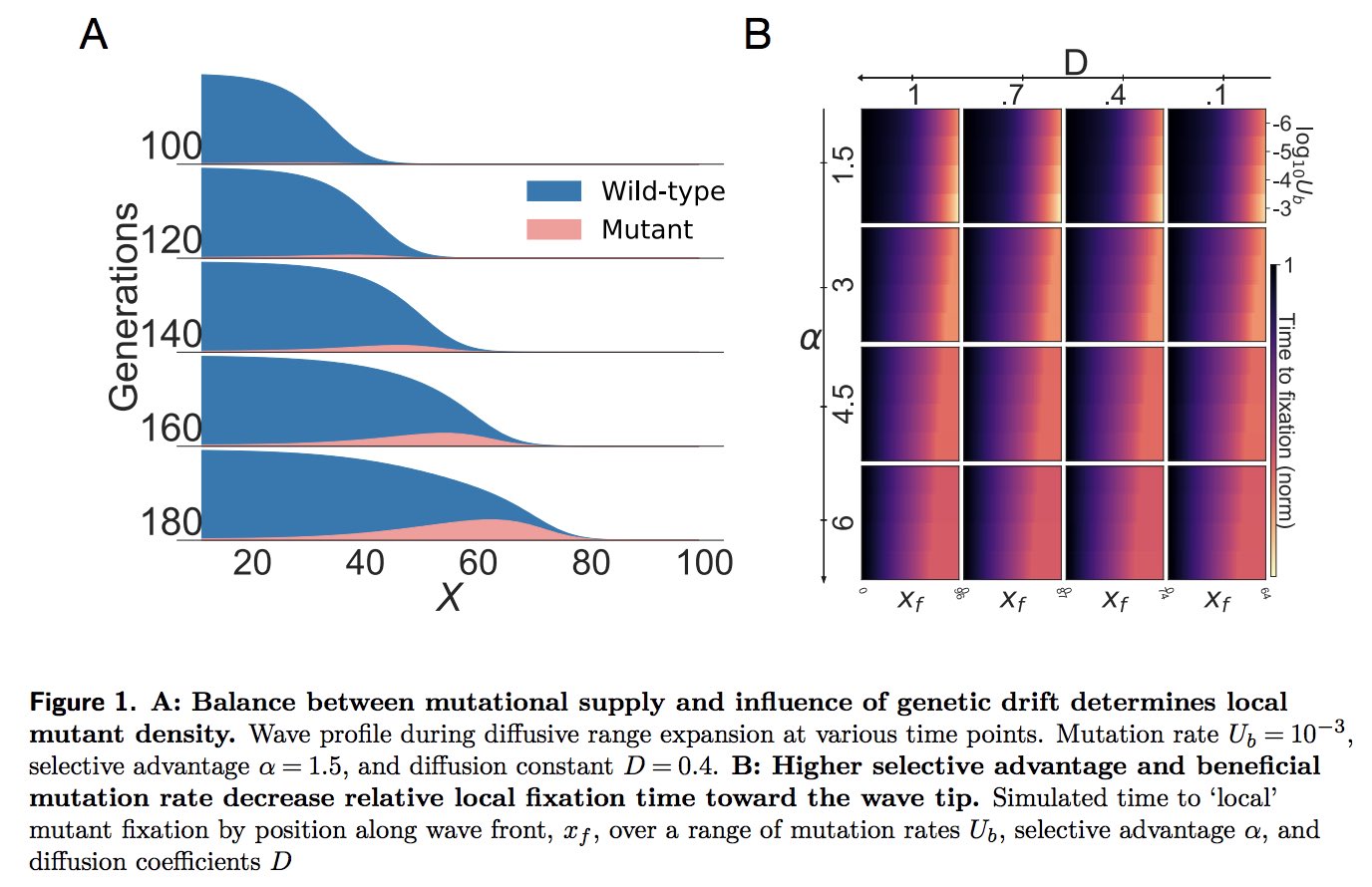

3rd aim will probe the evolutionary stability (in space, as we've done before on graphs, and in time) of the ecological dynamics we measure in our game assays. Implementing replicator-mutator dynamics in collab w/ @stevenstrogatz, we'll see how these games change through time (h/t to @kaznatcheev for the idea!).
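For readers unfamiliar with replicator-mutator dynamics: in its common textbook form, the frequency x_i of strategy i changes as dx_i/dt = sum_j x_j f_j(x) Q_ji - x_i phi, where f_j is the fitness of strategy j, phi = sum_j x_j f_j is the mean fitness, and Q_ji is the probability that offspring of type j end up as type i. Setting Q to the identity recovers plain replicator dynamics. Below is a minimal Python sketch of that textbook form using forward-Euler integration. It is not the model we will build with @stevenstrogatz, and the payoff matrix, mutation rate, and function name are all hypothetical.

```python
import numpy as np

def replicator_mutator(x0, A, Q, dt=0.01, steps=5000):
    """Integrate dx_i/dt = sum_j x_j f_j Q[j, i] - x_i * phi (forward Euler).

    A: payoff matrix, so fitness is f = A @ x.
    Q: row-stochastic mutation matrix; Q[j, i] = P(offspring of j is type i).
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        f = A @ x                            # fitness of each strategy
        phi = x @ f                          # mean fitness
        x = x + dt * ((x * f) @ Q - x * phi)
    return x

# Hypothetical two-strategy example: strategy 0 always out-earns strategy 1.
A = np.array([[1.0, 1.0], [0.0, 0.0]])
mu = 0.01                                    # hypothetical mutation probability
Q = np.array([[1 - mu, mu], [mu, 1 - mu]])

no_mutation = replicator_mutator([0.5, 0.5], A, np.eye(2))
with_mutation = replicator_mutator([0.5, 0.5], A, Q)
print(no_mutation)    # strategy 1 is driven toward extinction
print(with_mutation)  # mutation keeps strategy 1 at a low, nonzero frequency
```

With mutation switched off, the less fit strategy is driven out; with a small mutation rate it persists at a low frequency (a mutation-selection balance), which is the kind of effect that can change how stable the measured ecological dynamics look over time.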

This also means that we are looking for someone to join the team: specifically, a postdoc with an interest in ecology and evolution and a desire to do some modeling and in vitro experiments. Cancer experience is NOT required; kindness and inclusivity are. Please spread the word, or check out the lab website and get in touch by email if interested.